Introduction cm

[ Back to index ]

Introduction to the MLCommons CM language

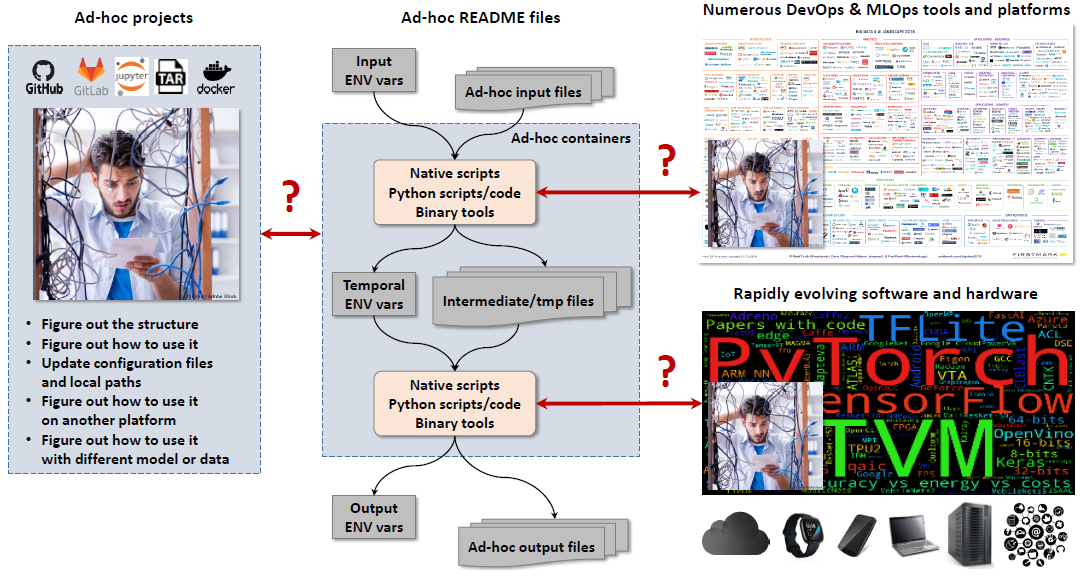

During the past 10 years, the community has considerably improved the reproducibility of experimental results from research projects and published papers by introducing the artifact evaluation process with a unified artifact appendix and reproducibility checklists, Jupyter notebooks, containers, and Git repositories.

On the other hand, our experience reproducing more than 150 papers revealed that it still takes weeks and months of painful and repetitive interactions between researchers and evaluators to reproduce experimental results.

This effort includes decrypting numerous README files, examining ad-hoc artifacts and containers, and figuring out how to reproduce computational results. Furthermore, snapshot containers pose a challenge to optimize algorithms' performance, accuracy, power consumption and operational costs across diverse and rapidly evolving software, hardware, and data used in the real world.

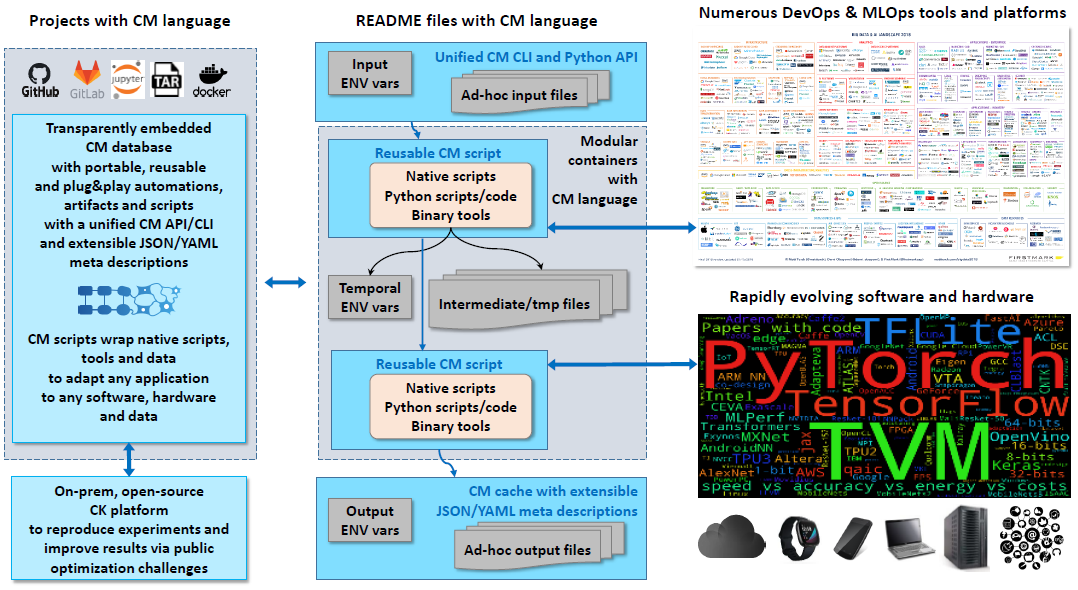

This practical experience and the feedback from the community motivated us to establish the MLCommons Task Force on Automation and Reproducibility and develop a light-weight, technology agnostic, and English-like workflow automation language called Collective Mind (MLCommons CM).

This language provides a common, non-intrusive and human-readable interface to any software project transforming it into a collection of reusable automation recipes (CM scripts). Following FAIR principles, CM automation actions and scripts are simple wrappers around existing user scripts and artifacts to make them * findable via human-readable tags, aliases and unique IDs; * accessible via a unified CM CLI and Python API with JSON/YAML meta descriptions; * interoperable and portable across any software, hardware, models and data; * reusable across all projects.

CM is written in simple Python and uses JSON and/or YAML meta descriptions with a unified CLI to minimize the learning curve and help researchers and practitioners describe, share, and reproduce experimental results in a unified, portable, and automated way across any rapidly evolving software, hardware, and data while solving the "dependency hell" and automatically generating unified README files and modular containers.

Our ultimate goal is to use CM language to facilitate reproducible research for AI, ML and systems projects, minimize manual and repetitive benchmarking and optimization efforts, and reduce time and costs when transferring technology to production across continuously changing software, hardware, models, and data.

Some projects supported by CM

- A unified way to run MLPerf inference benchmarks with different models, software and hardware. See current coverage.

- A unitied way to run MLPerf training benchmarks (prototyping phase)

- A unified way to run MLPerf tiny benchmarks (prototyping phase)

- A unified CM to run automotive benchmarks (prototyping phase)

- An open-source platform to aggregate, visualize and compare MLPerf results

- Leaderboard for community contributions

- Artifact Evaluation and reproducibility initiatives at ACM/IEEE/NeurIPS conferences:

- A unified way to run experiments and reproduce results from ACM/IEEE MICRO'23 and ASPLOS papers

- Student Cluster Competition at SuperComputing'23

- CM automation to reproduce IPOL paper

- Auto-generated READMEs to reproduce official MLPerf BERT inference benchmark v3.0 submission with a model from the Hugging Face Zoo

- Auto-generated Docker containers to run and reproduce MLPerf inference benchmark